JIT Trace

torch.jit.trace takes an eager model and a dummy input. The tracer runs the model on that input, records how the data flows through the model, and converts the whole model into a TorchScript module.
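The tracer only records the operations that actually execute for the example input. As a result, Python control flow that depends on tensor values is frozen into whichever branch the dummy input took. The sketch below uses a hypothetical `Gate` module, modeled on the decision-gate example from the PyTorch "Introduction to TorchScript" tutorial. It contrasts `torch.jit.trace` with `torch.jit.script`, which compiles the Python source and keeps both branches.

```python
import torch

class Gate(torch.nn.Module):
    def forward(self, x):
        # Data-dependent branch: which path runs depends on the values in x
        if x.sum() > 0:
            return x * 2
        else:
            return x + 10

model = Gate().eval()
example = torch.ones(3)  # sum > 0, so the tracer only sees the "x * 2" path

traced = torch.jit.trace(model, example)  # emits a TracerWarning about the branch
scripted = torch.jit.script(model)        # compiles both branches from source

neg = -torch.ones(3)
print(model(neg))     # eager takes the x + 10 branch: all 9s
print(traced(neg))    # traced replays x * 2 regardless of the condition: all -2s
print(scripted(neg))  # scripted keeps the if/else, so it matches eager
```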

For a concrete example, we use BERT (Bidirectional Encoder Representations from Transformers).

```python
from transformers import BertTokenizer, BertModel
import numpy as np
import torch
from time import perf_counter

def timer(f, *args):
    # Wall-clock time of a single call f(*args), in milliseconds
    start = perf_counter()
    f(*args)
    return 1000 * (perf_counter() - start)

# Load the BERT model
native_model = BertModel.from_pretrained("bert-base-uncased")

# In the Hugging Face API, torchscript=True does not load a TorchScript model.
# It configures the model so it can be traced with torch.jit.trace
# (for example, the model returns plain tuples instead of output objects)
script_model = BertModel.from_pretrained("bert-base-uncased", torchscript=True)
script_tokenizer = BertTokenizer.from_pretrained("bert-base-uncased")

# Tokenizing input text
text = "[CLS] Who was Jim Henson ? [SEP] Jim Henson was a puppeteer [SEP]"
tokenized_text = script_tokenizer.tokenize(text)

# Masking one of the input tokens
masked_index = 8
tokenized_text[masked_index] = "[MASK]"
indexed_tokens = script_tokenizer.convert_tokens_to_ids(tokenized_text)
segments_ids = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1]

# Creating a dummy input
tokens_tensor = torch.tensor([indexed_tokens])
segments_tensors = torch.tensor([segments_ids])
```
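The hard-coded `segments_ids` list marks which sentence each token belongs to: 0 up to and including the first `[SEP]`, and 1 afterwards. The sketch below derives it from the token list with a hypothetical `segment_ids` helper. It assumes the tokenizer splits the example text into the 14 WordPiece tokens shown, which matches the 14 entries of `segments_ids`.

```python
def segment_ids(tokens, sep="[SEP]"):
    """Hypothetical helper: 0 for tokens up to and including the first [SEP], 1 afterwards."""
    ids = []
    segment = 0
    for tok in tokens:
        ids.append(segment)
        if tok == sep and segment == 0:
            segment = 1  # every token after the first [SEP] belongs to sentence B
    return ids

# Assumed WordPiece output for the example sentence (14 tokens)
tokens = ["[CLS]", "who", "was", "jim", "henson", "?", "[SEP]",
          "jim", "henson", "was", "a", "puppet", "##eer", "[SEP]"]
print(segment_ids(tokens))
print(tokens[8])  # the token that masked_index = 8 replaces with [MASK]
```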

Next, measure the inference speed of the eager-mode PyTorch model on the CPU and on the GPU.

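```python
# Measure eager-model inference on the CPU
native_model.eval()
# Note: the second positional parameter of BertModel.forward is attention_mask,
# so segments_tensors is passed as the attention mask here (the traced call does the same)
print(np.mean([timer(native_model, tokens_tensor, segments_tensors) for _ in range(100)]))

# Measure eager-model inference on the GPU
native_model = native_model.cuda()
native_model.eval()
tokens_tensor_gpu = tokens_tensor.cuda()
segments_tensors_gpu = segments_tensors.cuda()
print(np.mean([timer(native_model, tokens_tensor_gpu, segments_tensors_gpu) for _ in range(100)]))
```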
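The `timer` helper times a single call with no warm-up, runs with autograd enabled, and does not synchronize with the GPU. CUDA kernels are launched asynchronously, so a GPU call can return before its work has finished. The first calls to a TorchScript module may also include one-off JIT optimization. The sketch below shows one way to make the measurement more careful. `benchmark_ms` and its `warmup`/`iters` parameters are hypothetical names, and the numbers reported in this article were not produced with it.

```python
import statistics
import time

import torch

def benchmark_ms(fn, *args, warmup=5, iters=20):
    """Median latency of fn(*args) in milliseconds, with warm-up and CUDA sync."""
    on_gpu = any(isinstance(a, torch.Tensor) and a.is_cuda for a in args)
    times = []
    with torch.no_grad():  # inference only: skip autograd bookkeeping
        for _ in range(warmup):  # absorb one-off setup and JIT optimization
            fn(*args)
        for _ in range(iters):
            if on_gpu:
                torch.cuda.synchronize()  # start timing from an idle GPU
            start = time.perf_counter()
            fn(*args)
            if on_gpu:
                torch.cuda.synchronize()  # wait until the queued kernels finish
            times.append(1000 * (time.perf_counter() - start))
    return statistics.median(times)

# Usage with a small stand-in model
model = torch.nn.Linear(16, 4).eval()
x = torch.randn(8, 16)
print(f"{benchmark_ms(model, x):.3f} ms")
```

The median is used instead of the mean so that an occasional slow call (for example, from the OS scheduler) does not skew the result.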

Then measure the inference speed of the TorchScript model on the CPU and on the GPU.

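```python
# Measure TorchScript inference on the CPU
traced_model = torch.jit.trace(script_model, (tokens_tensor, segments_tensors))

# torch.jit.trace does not call .eval() itself; the traced graph reflects whatever
# mode the model was in while tracing. from_pretrained already returns the model
# in eval mode, so an explicit script_model.eval() is not needed here
print(np.mean([timer(traced_model, tokens_tensor, segments_tensors) for _ in range(100)]))
```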
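Whether tracing switches a model to evaluation mode is easy to check directly. The minimal sketch below uses small hypothetical modules. `torch.jit.trace` leaves the `training` flag alone, so `.eval()` should be called before tracing: layers such as dropout are recorded in whatever mode is active at trace time.

```python
import torch

x = torch.randn(4, 8)

# 1) torch.jit.trace does not switch a module to eval mode by itself
lin = torch.nn.Linear(8, 8)  # freshly built modules start in training mode
torch.jit.trace(lin, x)
print(lin.training)          # still True after tracing

# 2) So call .eval() first; dropout is then inactive while the graph is recorded
net = torch.nn.Sequential(torch.nn.Linear(8, 8), torch.nn.Dropout(p=0.5)).eval()
traced = torch.jit.trace(net, x)
print(torch.allclose(traced(x), net(x)))  # deterministic, so traced matches eager
```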

Finally, test the TorchScript model's performance on the GPU.
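The GPU code for the traced model is not shown here. The sketch below illustrates the usual pattern using a small hypothetical `TinyNet` in place of BERT, so that it runs without downloading weights. Move the model to the device first, create the example input on the same device, and trace there. Device information for tensors created during tracing can end up baked into the graph. For the BERT example this would presumably be `torch.jit.trace(script_model.cuda(), (tokens_tensor_gpu, segments_tensors_gpu))`, but that line is an assumption, not the author's code.

```python
import torch

class TinyNet(torch.nn.Module):
    """Hypothetical stand-in for the BERT model used above."""
    def __init__(self):
        super().__init__()
        self.fc1 = torch.nn.Linear(16, 32)
        self.fc2 = torch.nn.Linear(32, 4)

    def forward(self, x):
        return self.fc2(torch.relu(self.fc1(x)))

# Use the GPU when one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = TinyNet().eval().to(device)          # move the weights first
example = torch.randn(1, 16, device=device)  # example input on the same device
traced_gpu = torch.jit.trace(model, example) # trace on the target device

with torch.no_grad():
    out = traced_gpu(example)
print(out.device, out.shape)
```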

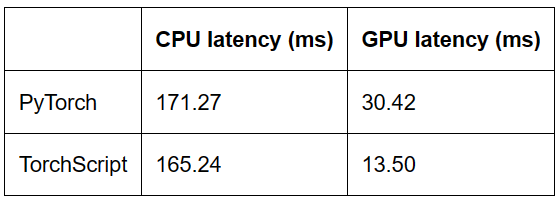

The final results are shown in the table above.

The hardware I used was Google Colab, with an Intel(R) Xeon(R) CPU @ 2.00GHz and a Tesla T4 GPU.

According to these results, TorchScript is 3.5% faster than PyTorch eager mode on the CPU and 55.6% faster on the GPU.
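The article does not state how these percentages are computed, and "X% faster" is ambiguous. It can mean the share of eager latency saved or the gain in throughput, and the two differ for the same pair of timings. The small sketch below uses hypothetical latencies, not the values from the table. Both function names are illustrative.

```python
def time_reduction_pct(eager_ms, script_ms):
    """Share of eager latency saved by TorchScript: (t_eager - t_script) / t_eager."""
    return 100 * (eager_ms - script_ms) / eager_ms

def speedup_pct(eager_ms, script_ms):
    """Throughput gain of TorchScript over eager: t_eager / t_script - 1."""
    return 100 * (eager_ms / script_ms - 1)

# Hypothetical latencies, not the measurements reported in this article
eager_ms, script_ms = 10.0, 8.0
print(f"{time_reduction_pct(eager_ms, script_ms):.1f}%")  # 20.0%
print(f"{speedup_pct(eager_ms, script_ms):.1f}%")         # 25.0%
```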

Next, we run the same kind of test with ResNet.

```python
import torchvision
import torch
from time import perf_counter
import numpy as np

def timer(f, *args):
    # Wall-clock time of a single call f(*args), in milliseconds
    start = perf_counter()
    f(*args)
    return 1000 * (perf_counter() - start)

# PyTorch CPU version
# (torchvision < 0.13 uses pretrained=True instead of the weights argument)
weights = torchvision.models.ResNet18_Weights.DEFAULT
model_ft = torchvision.models.resnet18(weights=weights)
model_ft.eval()
x_ft = torch.rand(1, 3, 224, 224)
print(f'pytorch cpu: {np.mean([timer(model_ft, x_ft) for _ in range(10)])}')

# PyTorch GPU version
model_ft_gpu = torchvision.models.resnet18(weights=weights).cuda()
x_ft_gpu = x_ft.cuda()
model_ft_gpu.eval()
print(f'pytorch gpu: {np.mean([timer(model_ft_gpu, x_ft_gpu) for _ in range(10)])}')

# TorchScript CPU version
# torch.jit.script compiles from source and takes no example input
# (torch.jit.trace(model_ft, x_ft) would trace the model instead)
script_cell = torch.jit.script(model_ft)
print(f'torchscript cpu: {np.mean([timer(script_cell, x_ft) for _ in range(10)])}')

# TorchScript GPU version
script_cell_gpu = torch.jit.script(model_ft_gpu)
print(f'torchscript gpu: {np.mean([timer(script_cell_gpu, x_ft_gpu) for _ in range(100)])}')
```
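Before comparing latencies, it is worth checking that the compiled modules produce the same outputs as the eager model. The sketch below uses a hypothetical `TinyCNN` instead of ResNet-18, so no weights need to be downloaded. It compiles the same network both ways, because the ResNet test uses `torch.jit.script` while this section is about `torch.jit.trace`. The `atol=1e-5` tolerance is an assumption that allows for small numerical differences introduced by JIT optimizations.

```python
import torch

class TinyCNN(torch.nn.Module):
    """Hypothetical small conv net standing in for ResNet-18."""
    def __init__(self):
        super().__init__()
        self.conv = torch.nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.bn = torch.nn.BatchNorm2d(8)
        self.pool = torch.nn.AdaptiveAvgPool2d(1)
        self.fc = torch.nn.Linear(8, 10)

    def forward(self, x):
        x = torch.relu(self.bn(self.conv(x)))
        return self.fc(torch.flatten(self.pool(x), 1))

model = TinyCNN().eval()
x = torch.rand(1, 3, 64, 64)

scripted = torch.jit.script(model)  # compiled from the Python source
traced = torch.jit.trace(model, x)  # recorded by running the example input

with torch.no_grad():
    ref = model(x)
    print(torch.allclose(ref, scripted(x), atol=1e-5))
    print(torch.allclose(ref, traced(x), atol=1e-5))
```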

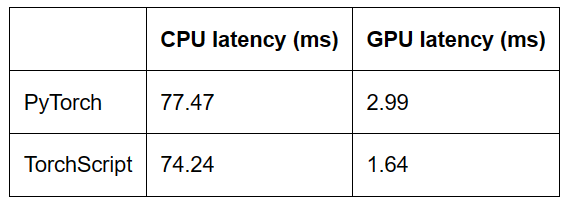

Compared with the PyTorch eager model, TorchScript improves CPU performance by 4.2% and GPU performance by 45%. This is consistent with the BERT results.
